Artificial Intelligence (AI) has brought transformative change to industries across the globe, and at its heart lie neural networks that power many modern applications. One essential concept behind the success of neural networks is the perceptron, a simple model that serves as the foundation for more sophisticated algorithms. Equally crucial to how neural networks function are activation functions, which determine how each unit transforms its input before passing it on. In this post, I will provide a clear breakdown of perceptrons and activation functions, explaining how they work and why they are vital to AI development. By the end, you’ll have a better understanding of how these components shape AI models.

If you have read my previous article, Deep Learning Frameworks and Libraries: The Powerhouses of AI Development, you’ll recognize that understanding the core components of AI is just as important as knowing the tools that enable it. This post takes the next logical step by looking at the components that deep learning frameworks combine to create intelligent systems.

What is a Perceptron?

The perceptron is a fundamental building block of artificial neural networks and can be thought of as a single-layer neural network. It is a simple yet effective model, loosely inspired by the way a biological neuron works. It is designed to solve binary classification problems, where the goal is to categorize data into one of two classes.

A perceptron receives several input values, each corresponding to a feature in a dataset. These inputs are multiplied by weights, which indicate the importance of each feature. The weighted inputs are then summed and passed through an activation function to produce an output. In the classic perceptron this activation is a step function: if the weighted sum reaches a threshold, the perceptron is activated; otherwise, it remains inactive.

Here’s a breakdown of how a perceptron works:

-

Input Layer: The perceptron receives input values, which can be thought of as features from a dataset (x1,x2,x3,…,xn)

-

Weights: Each input is multiplied by a weight (w1,w2,w3,…,wn), which signifies the importance of that particular feature.

-

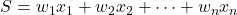

Summation: The inputs are weighted and summed together, which results in a weighted sum, often expressed as:

-

Thresholding: The sum is then compared against a threshold value. If the sum is greater than or equal to the threshold, the perceptron activates; otherwise, it does not activate.

-

Output: Based on the activation, the perceptron produces an output, which is typically binary (0 or 1), corresponding to the two classes in a binary classification task.
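The steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `perceptron_output` and the example weights and threshold are assumptions chosen for demonstration, picked so that the perceptron behaves like a logical AND gate.

```python
def perceptron_output(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs reaches the threshold, else 0."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Summation: multiply each input by its weight and add the results.
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    # Thresholding: activate when the sum is greater than or equal to the threshold.
    return 1 if weighted_sum >= threshold else 0


# Hypothetical weights and threshold that make the perceptron act like logical AND.
and_weights = [1.0, 1.0]
and_threshold = 1.5

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((x1, x2), "->", perceptron_output([x1, x2], and_weights, and_threshold))
```

Only the input (1, 1) produces a weighted sum of 2.0, which is the only sum that reaches the threshold of 1.5, so it is the only input that activates the perceptron.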

Perceptron Learning Rule

The perceptron is capable of learning from data, and this learning is governed by the perceptron learning rule. This rule adjusts the weights of the perceptron based on errors in its predictions, allowing it to improve over time.

The basic steps involved in the perceptron learning rule are:

-

Input and Prediction: The perceptron receives input and makes a prediction by applying the weights and summing them.

-

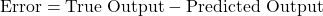

Error Calculation: The error is calculated by comparing the perceptron’s predicted output with the true output (the actual label).

-

Weight Adjustment: If the prediction is incorrect, the weights are adjusted according to the error. The adjustment is proportional to the error and the input values.

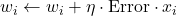

where η is the learning rate, a small constant that determines how much the weights are updated during each iteration.

-

Iteration: The process repeats for each training sample until the perceptron converges on an optimal set of weights that minimize errors.
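The learning rule can be sketched as follows. This is a minimal illustration under stated assumptions: the names `predict` and `train_perceptron` are hypothetical, the learning rate and epoch limit are arbitrary, and the sketch uses a learned bias term in place of an explicit threshold (a bias of b is equivalent to a threshold of -b). The training data is the logical OR function, which is linearly separable.

```python
def predict(weights, bias, inputs):
    """Step-activated prediction: 1 if the weighted sum plus bias is non-negative."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if weighted_sum >= 0 else 0


def train_perceptron(samples, labels, learning_rate=0.1, max_epochs=100):
    """Train with the perceptron learning rule and return (weights, bias)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0  # assumption: bias replaces the threshold (threshold = -bias)
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in zip(samples, labels):
            # Error calculation: true label minus prediction (-1, 0, or +1).
            error = target - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                # Weight adjustment: proportional to the error and the input.
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # a full pass without errors, so stop iterating
            break
    return weights, bias


# Logical OR: linearly separable, so the rule reaches an error-free solution.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 1, 1, 1]
weights, bias = train_perceptron(samples, labels)
print([predict(weights, bias, s) for s in samples])
```

The `max_epochs` cap matters in practice: on data that is not linearly separable the loop would otherwise never reach a pass with zero mistakes.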

While the perceptron can learn linear decision boundaries, it cannot solve problems that are not linearly separable; the classic example is the XOR function. This is where more advanced neural network models come into play: they stack multiple layers of units and use non-linear activation functions to represent more complex decision boundaries.
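To illustrate why extra layers help, here is a sketch of a two-layer network of step-activated units that computes XOR, a function that no single perceptron can represent because its two classes cannot be separated by one straight line. The weights are hand-picked for illustration rather than learned, and the names `step` and `xor_network` are assumptions of this example.

```python
def step(value):
    """Step activation: 1 if the value is non-negative, else 0."""
    return 1 if value >= 0 else 0


def xor_network(x1, x2):
    """Two-layer network with hand-picked weights that computes XOR."""
    # Hidden layer: two perceptrons looking at the same inputs.
    h_or = step(x1 + x2 - 0.5)   # fires when at least one input is 1
    h_and = step(x1 + x2 - 1.5)  # fires only when both inputs are 1
    # Output perceptron: "OR but not AND", which is XOR.
    return step(h_or - h_and - 0.5)


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((x1, x2), "->", xor_network(x1, x2))
```

Each unit on its own still draws a single linear boundary; it is the combination of the hidden units through a second layer that produces the non-linear result.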

The Role of Activation Functions

Activation functions are critical to the functioning of neural networks. They decide whether a neuron should be activated based on the input received. These functions introduce non-linearity into the model, enabling the network to learn from complex patterns in data.

Without non-linear activation functions, a neural network would behave like a linear model no matter how many layers it has, because a composition of linear transformations is itself a linear transformation. Such a network could not learn complex decision boundaries. By introducing non-linearity, activation functions allow the network to model intricate patterns and perform well on tasks such as image recognition, natural language processing, and more.
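A small numeric check makes this concrete. The sketch below uses plain Python lists as matrices; the helper names and the weight values are arbitrary assumptions for illustration. It shows that two stacked linear layers give the same result as a single layer whose weights are the product of the two, and that inserting ReLU between them changes the result.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    columns = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in columns] for row in a]


def relu_vec(vector):
    """Apply ReLU element-wise."""
    return [max(0.0, v) for v in vector]


# Arbitrary example weights: 2 inputs -> 3 hidden units -> 1 output.
W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]
W2 = [[1.0, -2.0, 0.5]]
x = [1.0, 2.0]

two_linear_layers = matvec(W2, matvec(W1, x))
single_equivalent_layer = matvec(matmul(W2, W1), x)
with_relu_between = matvec(W2, relu_vec(matvec(W1, x)))

print("two linear layers:      ", two_linear_layers)
print("single equivalent layer:", single_equivalent_layer)
print("with ReLU in between:   ", with_relu_between)
```

The first two results agree, so the extra linear layer added no expressive power. The third differs because ReLU zeroes out the negative hidden value before the second layer sees it.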

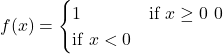

Here’s a table summarizing the key activation functions used in neural networks:

| Activation Function | Formula | Range | Key Characteristics |
|---|---|---|---|
| Step Function | $f(x) = 1$ if $x \ge 0$, else $0$ | {0, 1} (binary) | Simple binary output, non-differentiable |
| Sigmoid | $f(x) = \frac{1}{1 + e^{-x}}$ | (0, 1) | Smooth, differentiable, prone to vanishing gradient |
| Tanh | $f(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$ | (-1, 1) | Output centered around 0, prone to vanishing gradient |
| ReLU | $f(x) = \max(0, x)$ | [0, ∞) | Computationally efficient, less prone to vanishing gradient |
| Leaky ReLU | $f(x) = \max(\alpha x, x)$ with small $\alpha$, e.g. 0.01 | (-∞, ∞) | Addresses dying ReLU problem, small slope for negative values |
| Softmax | $f(x_i) = \frac{e^{x_i}}{\sum_j e^{x_j}}$ | (0, 1), sums to 1 | Used in multi-class classification, converts outputs into probabilities |
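The functions in the table can be written directly with Python’s standard `math` module. This is a minimal sketch for scalar inputs, not how deep learning frameworks implement them; the default `alpha=0.01` for Leaky ReLU is a common but assumed value, and the sigmoid and softmax use standard rearrangements to avoid overflow for large inputs.

```python
import math


def step(x):
    """Binary output: 1 for non-negative input, else 0."""
    return 1.0 if x >= 0 else 0.0


def sigmoid(x):
    """Squash x into (0, 1); split into two branches to avoid overflow in exp."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def tanh(x):
    """Squash x into (-1, 1), centered on 0."""
    return math.tanh(x)


def relu(x):
    """Pass positive values through, clamp negatives to 0."""
    return max(0.0, x)


def leaky_relu(x, alpha=0.01):
    """Like ReLU, but keep a small slope (alpha) for negative values."""
    return x if x > 0 else alpha * x


def softmax(values):
    """Turn a list of scores into probabilities that sum to 1."""
    largest = max(values)
    # Subtracting the maximum does not change the result but avoids overflow.
    exps = [math.exp(v - largest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


for name, fn in [("step", step), ("sigmoid", sigmoid), ("tanh", tanh),
                 ("relu", relu), ("leaky_relu", leaky_relu)]:
    print(name, [round(fn(x), 4) for x in (-2.0, 0.0, 2.0)])
print("softmax", [round(p, 4) for p in softmax([1.0, 2.0, 3.0])])
```

Softmax is the only function here that operates on a whole list of values at once, because each output depends on all of the inputs.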

Why Perceptrons and Activation Functions Matter

Understanding the role of perceptrons and activation functions is essential for anyone working in AI. These two components are the foundation upon which more complex neural networks are built. The perceptron provides the basic unit that learns from data by adjusting its weights, and without non-linear activation functions, neural networks would not be able to learn non-linear relationships in data. Together, they enable AI systems to tackle a wide range of tasks, from recognizing images to processing natural language.

As AI continues to evolve, the importance of these fundamental components remains unchanged. The insights gained from studying perceptrons and activation functions will continue to shape the future of AI, ensuring that machine learning models can adapt to the increasing complexity of the tasks they are given.

Perceptrons and Activation Functions in Advanced AI Systems

Advanced AI systems like DeepSeek, ChatGPT, and Gemini build on the concepts of perceptrons and activation functions in more sophisticated forms. ChatGPT, for instance, is built on a Transformer-based deep neural network. Its feed-forward layers are made of many perceptron-like units (a weighted sum followed by a non-linear activation), and Softmax is used to turn the model’s output scores into a probability distribution over the next token, which is how it generates human-like, context-aware text.

DeepSeek’s models are likewise large language models built from Transformer layers. The same core ideas apply: each layer combines learned weights with non-linear activations, which lets the model recognize patterns in data and make predictions at a scale that hand-written rules could not match.

Gemini, Google’s family of multimodal models, applies these building blocks to text, images, and other kinds of input. The exact architectures and activation functions of these production systems are not fully public, but ReLU-style activations and Softmax are standard choices in Transformer networks.

In all these systems, the core concepts of perceptrons and activation functions are applied in advanced ways to process data and solve complex problems. The non-linearity introduced by activation functions allows these systems to learn from vast, varied datasets, making them effective at tasks such as language processing, pattern recognition, and predictive analytics.

Conclusion

Perceptrons and activation functions are crucial building blocks in the world of AI. Understanding these components is key to appreciating how neural networks learn from data and make predictions. Whether you’re an AI architect, developer, or enthusiast, grasping the principles behind these elements will provide a solid foundation for further exploration of machine learning and deep learning models.

As AI continues to evolve, these core concepts will remain fundamental in shaping the intelligent systems of tomorrow. Powerful AI systems such as DeepSeek, ChatGPT, and Gemini all depend on these basic principles to function effectively, making the study of perceptrons and activation functions essential for anyone looking to work with cutting-edge AI technology.

If you found this post insightful, feel free to check out my previous article on deep learning frameworks and libraries, which explores the tools and libraries used to build AI systems. As always, I encourage you to share your thoughts and questions in the comments section below. Let’s continue the conversation on AI and machine learning!
