In today’s AI-enabled enterprise, one of the biggest challenges is enabling large language models (LLMs) and other AI agents to interact meaningfully with real business systems (databases, APIs, file stores, legacy applications, and more) without building a custom point-to-point integration each time.

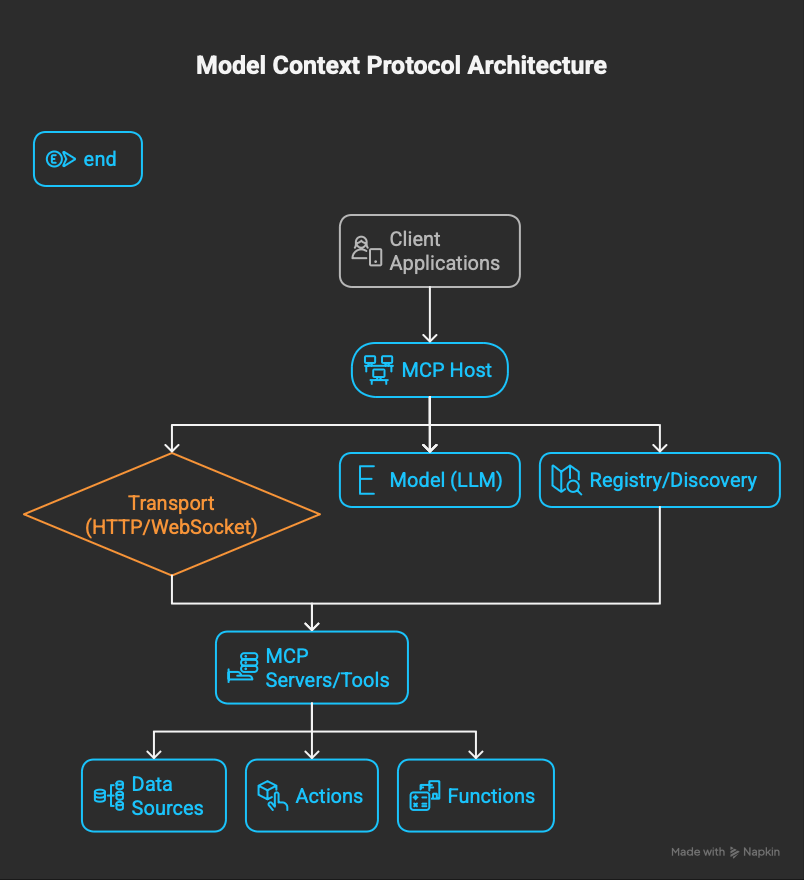

The Model Context Protocol (MCP), introduced by Anthropic in late 2024, addresses this by defining a uniform client–server architecture for AI systems. The MCP server is the endpoint that exposes tools, resources, and prompts to AI clients, giving them a controlled, well-defined way to interact with enterprise systems.

For business executives, MCP servers enable a new class of context-aware, tool-capable AI agents that go beyond conversation and perform actionable tasks in workflows: securely, auditably, and at scale.

What Is an MCP Server?

At a technical level, an MCP server:

- Exposes capabilities such as data access, tools, and workflows to AI clients.

- Operates using a host → client → server pattern:

  - Host: the AI application or runtime environment.

  - Client: the embedded connector that speaks the MCP protocol.

  - Server: the endpoint that delivers data and executes tools.

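- Communicates using JSON-RPC 2.0 over stdio (for local servers) or HTTP (for remote servers). The original 2024-11-05 specification paired HTTP with Server-Sent Events (SSE); the 2025-03-26 revision replaces that pairing with a single Streamable HTTP transport.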

This standardization allows any compliant MCP client to connect to multiple servers seamlessly—eliminating bespoke integrations and reducing operational friction.
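
To make the wire format concrete, here is a minimal sketch of how a JSON-RPC 2.0 request could be framed for a local stdio connection, where each message travels as one newline-terminated line of JSON. The helper names (`make_request`, `encode_line`, `decode_line`) are illustrative assumptions, not part of any SDK; only the `tools/list` method name comes from the protocol.

```python
import json
from itertools import count

_ids = count(1)  # request ids must be unique within a session


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request object."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def encode_line(msg):
    """Serialize one message as a single newline-terminated line."""
    return json.dumps(msg, separators=(",", ":")) + "\n"


def decode_line(line):
    """Parse one line back into a message and check the protocol marker."""
    msg = json.loads(line)
    if msg.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    return msg


request = make_request("tools/list")
wire = encode_line(request)
```

A production client would normally rely on an MCP SDK for this framing rather than hand-rolling it; the sketch only shows how little is involved at the message level.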

Architecture & Key Components

Capability Discovery

When a client connects, it queries the server for its available tools, data resources, and prompts. Because discovery happens at connection time, new capabilities can be added to a server without changing or redeploying its clients.
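
The discovery exchange can be sketched as follows. The registry contents and the `handle_list` and `discover` functions are hypothetical; the method names (`tools/list`, `resources/list`, `prompts/list`) and the result keys follow the protocol, and `-32601` is the standard JSON-RPC "Method not found" code.

```python
# Hypothetical in-memory registry; a real server would build this
# from the integrations it actually exposes.
REGISTRY = {
    "tools": [{"name": "create_ticket", "description": "Open a ticket"}],
    "resources": [{"uri": "file:///reports/q3.csv", "name": "Q3 report"}],
    "prompts": [{"name": "incident_summary", "description": "Summarize an incident"}],
}


def handle_list(request):
    """Server side: answer a '<kind>/list' request from the registry."""
    kind, _, action = request["method"].partition("/")
    if action != "list" or kind not in REGISTRY:
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32601, "message": "Method not found"},
        }
    return {"jsonrpc": "2.0", "id": request["id"], "result": {kind: REGISTRY[kind]}}


def discover(send):
    """Client side: query each capability kind and collect the names."""
    found = {}
    for i, kind in enumerate(("tools", "resources", "prompts"), start=1):
        reply = send({"jsonrpc": "2.0", "id": i, "method": f"{kind}/list"})
        found[kind] = [item["name"] for item in reply["result"][kind]]
    return found


# Here the "transport" is just a direct function call.
capabilities = discover(handle_list)
```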

Tools

Executable actions that the AI model can invoke, such as creating Jira tickets, sending emails, or executing scripts. Each tool declares an input schema (and, optionally, an output schema); permission scopes are applied by the server’s authorization layer.
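
As a rough illustration, a tool definition and its call path might look like the sketch below. The `create_ticket` tool, the `required_scope` field, and the scope string are assumptions made for this example (scopes are a server-side policy choice, not a protocol field); the `inputSchema` key and the `content` / `isError` result shape follow the protocol. The validator covers only required keys and primitive types, a small subset of JSON Schema.

```python
TOOL = {
    "name": "create_ticket",  # hypothetical tool
    "inputSchema": {
        "type": "object",
        "properties": {"title": {"type": "string"}, "priority": {"type": "integer"}},
        "required": ["title"],
    },
    "required_scope": "tickets:write",  # assumption: server-side policy, not protocol
}

_TYPES = {"string": str, "integer": int, "boolean": bool}


def _matches(value, type_name):
    if type_name == "integer":
        # bool is a subclass of int in Python, so exclude it explicitly
        return isinstance(value, int) and not isinstance(value, bool)
    return isinstance(value, _TYPES[type_name])


def validate(args, schema):
    """Return a list of problems; an empty list means the arguments pass."""
    errors = [f"missing: {key}" for key in schema.get("required", []) if key not in args]
    for key, value in args.items():
        spec = schema["properties"].get(key)
        if spec is None:
            errors.append(f"unexpected: {key}")
        elif not _matches(value, spec["type"]):
            errors.append(f"{key}: expected {spec['type']}")
    return errors


def _result(text, is_error):
    return {"isError": is_error, "content": [{"type": "text", "text": text}]}


def call_tool(args, granted_scopes):
    """Check the caller's scope, then the arguments, then run the action."""
    if TOOL["required_scope"] not in granted_scopes:
        return _result("permission denied", True)
    errors = validate(args, TOOL["inputSchema"])
    if errors:
        return _result("; ".join(errors), True)
    return _result(f"created: {args['title']}", False)
```

Checking permissions before validating arguments means an unauthorized caller learns nothing about the tool’s expected input.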

Resources

Structured data sources—files, APIs, databases—that supply real-time context to models. The server manages querying, caching, and transformation layers to ensure efficiency and compliance.
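
One way to picture the caching layer is a small time-to-live cache in front of the backing system. This is a sketch under stated assumptions: the `CachedResources` class, the 30-second default, and the `fetch` callable are all hypothetical, while the returned `contents` structure mirrors the shape of a `resources/read` result.

```python
import time


class CachedResources:
    """Serve resource reads from a TTL cache in front of a slower backend."""

    def __init__(self, fetch, ttl_seconds=30.0, clock=time.monotonic):
        self._fetch = fetch    # callable: uri -> text (hypothetical backend)
        self._ttl = ttl_seconds
        self._clock = clock    # injectable so the cache can be tested
        self._cache = {}       # uri -> (expires_at, text)

    def read(self, uri):
        now = self._clock()
        hit = self._cache.get(uri)
        if hit is None or hit[0] <= now:
            # Miss or expired entry: go to the backend and refresh the cache.
            text = self._fetch(uri)
            self._cache[uri] = (now + self._ttl, text)
        else:
            text = hit[1]
        return {"contents": [{"uri": uri, "mimeType": "text/plain", "text": text}]}
```

The right TTL depends on how stale the context is allowed to be; for regulated data, a short TTL or no caching at all may be the safer default.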

Prompts & Roots

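Prompts are reusable templates that guide LLM reasoning and can support multi-step orchestration such as “analyze → plan → execute → report”. Roots are a separate, client-side feature: the client declares the filesystem locations (as URIs) within which a server is expected to operate.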
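
A reusable prompt template for the analyze, plan, execute, report sequence could be sketched like this. The `incident_review` prompt, its wording, and the `get_prompt` function are hypothetical; the returned `description` / `messages` structure follows the shape of a `prompts/get` result.

```python
from string import Template

# Hypothetical prompt catalog; "$service" is filled in per request.
PROMPTS = {
    "incident_review": {
        "description": "Four-step incident review",
        "arguments": ["service"],
        "steps": [
            "Analyze recent alerts for $service.",
            "Plan a remediation for $service.",
            "Execute the approved remediation steps for $service.",
            "Report the outcome for $service to the on-call channel.",
        ],
    }
}


def get_prompt(name, arguments):
    """Render one prompt template into a list of user messages."""
    spec = PROMPTS[name]
    missing = [arg for arg in spec["arguments"] if arg not in arguments]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    messages = [
        {
            "role": "user",
            "content": {"type": "text", "text": Template(step).substitute(arguments)},
        }
        for step in spec["steps"]
    ]
    return {"description": spec["description"], "messages": messages}
```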

Transport & Security Layers

In an enterprise deployment, MCP servers should implement:

- Strong authentication (OAuth 2.0, tokens, or service identity)

- Fine-grained authorization and audit logging

- Isolation between agents and data domains

- Encrypted transport (TLS) for remote HTTP connections, including streamed responses
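
The authorization and audit requirements above can be sketched as a single gate that every action passes through. Everything here is an assumption made for illustration: the agent names, the permission strings, the in-memory `POLICY` table, and the list used as an audit log. A real deployment would back these with an identity provider and durable, tamper-evident log storage.

```python
import json
import time

# Hypothetical policy: which agent identity holds which permissions.
POLICY = {
    "agent-finance": {"ledger.read"},
    "agent-support": {"tickets.read", "tickets.write"},
}

AUDIT_LOG = []  # stand-in for durable audit storage


def authorize_and_audit(agent_id, permission, action):
    """Record the attempt, then run `action` only if the agent is allowed."""
    allowed = permission in POLICY.get(agent_id, set())
    AUDIT_LOG.append(
        json.dumps(
            {
                "ts": time.time(),
                "agent": agent_id,
                "permission": permission,
                "allowed": allowed,
            }
        )
    )
    if not allowed:
        raise PermissionError(f"{agent_id} lacks {permission}")
    return action()
```

Writing the audit entry before the permission check resolves means denied attempts are logged as well, which is usually what an auditor wants to see.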

Deployment Variants

Servers can be deployed:

- On-premises — for sensitive or regulated data.

- In the cloud — for scalability and elasticity.

- At the edge — for latency-sensitive or IoT workloads.

Recent Innovations (2024 – 2025)

- Enterprise SDKs are now available in Python, TypeScript, Java, and Rust for faster server development.

- Managed Cloud MCP Servers (e.g., from Microsoft Azure) integrate directly with identity and policy services.

- Edge/Hybrid Implementations enable local decision-making with synchronized context from central models.

- Multi-Agent Orchestration allows several AI agents to chain actions across multiple servers and workflows.

- Security Tooling such as policy validators, prompt-injection filters, and role-aware sandboxes is maturing rapidly.

Business Implications for Executives

Opportunities

- Rapid AI Integration – Seamlessly connect AI to existing systems.

- Reusable Tooling – Build once, reuse across models and teams.

- Actionable Context – Empower AI to not just respond, but act.

- Adaptive Architecture – Add new capabilities without redesign.

Risks & Challenges

- Security Exposure – Misconfigured servers may allow data leakage or unintended actions.

- Operational Governance – Requires clear ownership, auditing, and lifecycle management.

- Interoperability Maturity – Standards are evolving; version compatibility must be tracked.

- Skill Gaps – Engineers must understand both LLM and integration domains.
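
On the version-compatibility point, MCP identifies protocol revisions by date strings, and the client and server agree on one during initialization: the server echoes the requested version if it supports it, and otherwise offers one it does support. The sketch below illustrates that rule; the supported-version list and the function names are assumptions for this example.

```python
# Assumption: the revisions this hypothetical server supports.
SERVER_VERSIONS = ["2024-11-05", "2025-03-26"]


def negotiate(requested):
    """Echo the requested version if supported, else offer the newest one."""
    if requested in SERVER_VERSIONS:
        return requested
    # Date-formatted strings sort chronologically as plain text.
    return max(SERVER_VERSIONS)


def client_accepts(offered, client_versions):
    """The client should disconnect if it cannot speak the offered version."""
    return offered in client_versions
```

Tracking which revisions each server and client supports is the practical form of the governance task described above.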

Key Questions for Decision-Makers

- Scope – Which systems or APIs will the MCP server expose?

- Security – How are authentication, authorization, and auditing enforced?

- Deployment – What infrastructure model fits your compliance profile?

- Lifecycle – How are versioning, discovery, and capability updates handled?

- Workflow Integration – Can your AI agents coordinate multi-step operations through these servers?

- Vendor Neutrality – Are your MCP servers open-standard and model-agnostic?

Conclusion

The shift from static chatbots to dynamic, action-capable AI agents demands an equally dynamic integration layer. MCP servers are that layer—standardized, scalable, and secure.

For executives, they represent an opportunity to unify AI capabilities across the enterprise while maintaining governance and control. Those who architect early with MCP will be positioned to operationalize AI faster, more safely, and with a more sustainable competitive edge.